Market Design - Continuous Scaled Limit Orders

Pt. 1 - Krypton Protocol & Fully Continuous Trading

Intro

Permissionless financial innovation will prove a massive unlock for the vast treasure trove of academic literature detailing alternatives to the way trading has traditionally been performed. Incumbent exchanges in traditional finance, such as the NYSE, CME, Nasdaq, and CBOE, have had no incentive to disrupt the status quo, especially considering that a large proportion of their revenues derive from volume generated by high-frequency traders (HFTs). New entrants with arguably superior market designs could hardly compete with incumbents given the challenges of 1) bootstrapping initial liquidity and volume, 2) immense startup hardware requirements such as cables and computers, and 3) the enormous legal costs necessary to navigate a convoluted regulatory landscape. Decentralized finance has upended that paradigm.

Today’s post covers Krypton Protocol, the most distinctive decentralized exchange that I’ve personally come across.

Why is market design important?

First, let’s state with utmost clarity the foundational problem in trading that Krypton endeavors to solve. In today’s market structure, low latency traders use their speed to exploit time priority and be the first in the order queue. In traditional financial markets, this dynamic may manifest as HFTs picking off slower traders by lifting stale bids and asks before they’re cancelled. In crypto, this speed advantage may materialize as large orders being front-run by MEV bots monitoring the memory pool. In both cases, the price execution of uninformed traders is critically damaged, without any meaningful contribution to efficient pricing.

What is wrong with this model, and why does it simultaneously represent a significant opportunity? First, assume that “exploiting short-term market microstructure effects does not contribute to price discovery because no new information about an asset’s fundamentals is uncovered” (Krypton light paper, pg. 3). Therefore, no economic justification exists for the execution damage that uninformed traders incur or for the wealth transfer to low latency traders that occurs at their expense. It follows that the existence of HFTs is counterproductive to humanity’s progression, since resources allocated towards supporting economically unjustifiable activities represent a deadweight loss to society.

The following situation should add some color to what has so far been a strictly theoretical argument. In 2010, Spread Networks spent $300m laying a high-speed underground fiber optic cable between NYC and Chicago to cut data transmission time from 16ms to 13ms (Budish, Cramton, & Shim). Industry experts collectively exclaimed that 3ms is an “eternity” in HFT time and any MMs excluded from the line would be put out of business. Unsurprisingly, Spread’s cable is already obsolete after microwave networks cut transmission time to just ~8ms… and this was all the way back in 2014.

Setting aside the economic burden angle, more practically, large asset managers lose money when they trade in large amounts due to low-latency traders exploiting microstructure effects. Without the vast body of uninformed traders hungering for improved execution, it is impossible to underwrite Krypton’s thesis. In Fischer Black’s 1971 journal article, Toward a Fully Automated Stock Exchange, the godfather of mathematical finance accurately predicted that large asset managers would employ execution algorithms to minimize the price impact of their trades.

“An investor who is buying or selling a large amount of stock, in the absence of special information, can expect to do so over a long period of time at a price not very different, on average, from the current market price.”

Krypton’s flow trading would allow institutions to execute this strategy without incurring large bandwidth costs associated with repeatedly placing, modifying, and canceling thousands of orders throughout a single day.

Krypton’s vision

Individuals well-versed in the literature around market design can generally be placed into two categories: 1) those who believe toxic trading (and therefore MEV) is inevitable and that we must strive to limit its negative externalities, and 2) those who believe toxic trading is but an artifact of current market design and that a superior economic alternative must exist. I find that an academic could reasonably subscribe to the first ideology. An early-stage venture investor, on the other hand, cannot. A VC’s core function involves identifying convex opportunities and investing against entropy. Concepts aligned with ideology 2 are likely more asymmetric than those aligned with ideology 1. Put simply, VCs subscribed to ideology 1 appear to suffer from a lack of imagination.

Along those lines, Krypton’s novel exchange architecture represents a completely new paradigm in applied market design. Every week I come across a new project attempting to limit impermanent loss through some complicated mechanism, or popularize a minor variation on the constant product curve, or reduce MEV through a commit-reveal scheme, or apply some other blockchain-specific technical approach to optimal execution. Krypton’s purely economic solution to toxic trading dominates these comparatively paltry projects by addressing the root cause of execution inequity: discreteness in price, quantity, and time. In short, Krypton promises to liberate uninformed traders from the shackles of time priority through fully continuous trading.

How it works

Conceptually, Krypton’s advanced limit orders communicate an offer to buy or sell some asset at a specified rate within a specified price range. Because price, quantity, and time are all fully continuous, high-speed traders can exploit uninformed traders on only a very small proportion of their entire order. Furthermore, by eliminating time priority, Krypton ensures that low-latency traders compete primarily with other low-latency traders, which disincentivizes deadweight-loss investments in maintaining a technology edge.

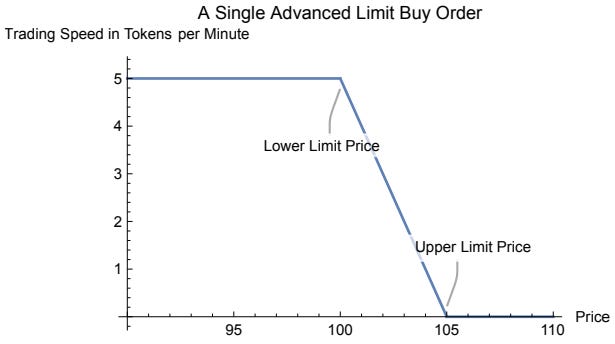

Krypton’s fundamental building block is the advanced limit order, which consists of a trade direction, the number of tokens to be traded, a maximum trading speed, and lower and upper limit prices. Krypton’s light paper describes its behavior as follows.

“An advanced buy order executes at its maximum speed below the lower limit price and does not trade at prices above the upper limit. In between these limits, the trading speed is linearly interpolated. An advanced limit order to sell works the other way around. It executes at the maximum speed for prices exceeding the upper limit and at zero speed for prices below the lower limit. Trading speed is linearly interpolated in between the upper and lower limits.”
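The light paper's description maps naturally onto a small function. What follows is only a minimal sketch of that description, not Krypton's actual implementation: the class name `AdvancedLimitOrder`, its field names, and the example numbers are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdvancedLimitOrder:
    """Hypothetical container for the order fields named in the text."""
    side: str         # "buy" or "sell"
    quantity: float   # total number of tokens to trade
    max_speed: float  # maximum trading speed, in tokens per unit of time
    lower: float      # lower limit price
    upper: float      # upper limit price

    def __post_init__(self):
        if self.side not in ("buy", "sell"):
            raise ValueError("side must be 'buy' or 'sell'")
        if not self.lower < self.upper:
            raise ValueError("lower limit must be below upper limit")

    def speed(self, price: float) -> float:
        """Trading speed at a given price, linearly interpolated between limits."""
        # Position of the price between the two limits, clamped to [0, 1].
        frac = (price - self.lower) / (self.upper - self.lower)
        frac = min(1.0, max(0.0, frac))
        if self.side == "buy":
            # Buy: full speed at or below the lower limit, zero at or above the upper.
            return self.max_speed * (1.0 - frac)
        # Sell: zero at or below the lower limit, full speed at or above the upper.
        return self.max_speed * frac


# Illustrative numbers only.
buy = AdvancedLimitOrder("buy", quantity=100.0, max_speed=2.0, lower=100.0, upper=110.0)
sell = AdvancedLimitOrder("sell", quantity=100.0, max_speed=2.0, lower=100.0, upper=110.0)
print(buy.speed(105.0), sell.speed(105.0))
```

With these made-up limits, both orders trade at half of their maximum speed at a price of 105, the midpoint of the range.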

Again, Krypton’s design practically eliminates the advantages of high-speed traders because price is connected to trading speed rather than quantity. Therefore, the quicker a trader buys, the higher they will move the price at which their own order executes. I’ll add that the relationship between speed and price in Krypton highlights a fundamental misalignment of incentives in the design of CLOBs. Given that high trading speed signals the intention to exploit a short-term information advantage, it’s quite counterintuitive that said speed should benefit rather than damage an informed trader’s execution in the current trading paradigm.

Consider the following example demonstrating how front-running becomes impractical on Krypton.

An uninformed trader initiates swap X at price P1 in the diagram below, and a MEV bot places a buy order before swap X in the same block pushing the equilibrium price to P2.

To clarify, because Krypton interprets blocks as singular points in time and the inter-block period as a period of time, the front-runner actually increases the execution price for themselves as well as the trader being front-run.

To exploit swap X, the front-runner would need to sell what they purchased back to the trader being front-run. This would require a high trading speed that may raise the execution price above the upper limit specified in swap X, at which point swap X would remain unexecuted until the front-runner’s transaction was complete.

Instead, if the execution price is still within the range specified in swap X the order would execute at P2 until the front-runner’s trade was completed, at which point swap X’s execution price would return to the previous equilibrium price P1 for the remainder of the order.

In order to sell the quantity back rapidly, however, the front-runner would push the supply curve up and to the left, implying the equilibrium price at sale, P3, would be less than at purchase. Therefore, this series of transactions would represent a net loss (P3 - P2 < 0).
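The price path in the example above can be reproduced with a toy calculation. The sketch below is a static snapshot under strong assumptions: the equilibrium price is taken to be the price at which total buy speed equals total sell speed, it is found by simple bisection, and order depletion, fees, and block timing are all ignored. The function names, the four orders, and their limits are hypothetical and not taken from Krypton's matching engine.

```python
def speed(side, max_speed, lower, upper, price):
    """Trading speed of one advanced limit order at a given price."""
    frac = min(1.0, max(0.0, (price - lower) / (upper - lower)))
    return max_speed * (frac if side == "sell" else 1.0 - frac)


def clearing_price(orders, lo=0.0, hi=1_000.0, iters=100):
    """Bisect for the price at which total buy speed equals total sell speed."""
    def excess_demand(price):
        total = 0.0
        for side, max_speed, lower, upper in orders:
            rate = speed(side, max_speed, lower, upper, price)
            total += rate if side == "buy" else -rate
        return total

    # Excess demand never increases with price, so bisection applies.
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if excess_demand(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


# Hypothetical book entries: (side, max_speed, lower_limit, upper_limit)
swap_x = ("buy", 1.0, 100.0, 110.0)
resting_sell = ("sell", 1.0, 100.0, 110.0)
front_run_buy = ("buy", 1.0, 105.0, 115.0)
front_run_sell = ("sell", 1.0, 95.0, 105.0)

p1 = clearing_price([swap_x, resting_sell])                  # before the front-run
p2 = clearing_price([swap_x, resting_sell, front_run_buy])   # front-runner buying
p3 = clearing_price([swap_x, resting_sell, front_run_sell])  # front-runner selling back
print(f"P1={p1:.2f}  P2={p2:.2f}  P3={p3:.2f}")
```

With these made-up orders the script prints P1=105.00, P2=108.33, and P3=101.67: the front-runner buys at a price their own order pushed up and sells at a price their own order pushed down, so P3 - P2 < 0.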

A final point - the computation required to calculate the equilibrium price on Krypton exceeds the block gas limit on Ethereum with just 200 bid and ask orders. To address this issue, Krypton leverages Chainlink’s decentralized oracle network to run their matching engine off-chain but perform settlement on-chain.

Why it’s better than alternatives

Any mechanisms by which digital assets are exchanged and any attempts at minimizing/redirecting/manipulating MEV or optimizing execution can be characterized as competitors to Krypton. I’ve narrowed this list to four sufficiently relevant yet disparate projects that are worth addressing in detail.

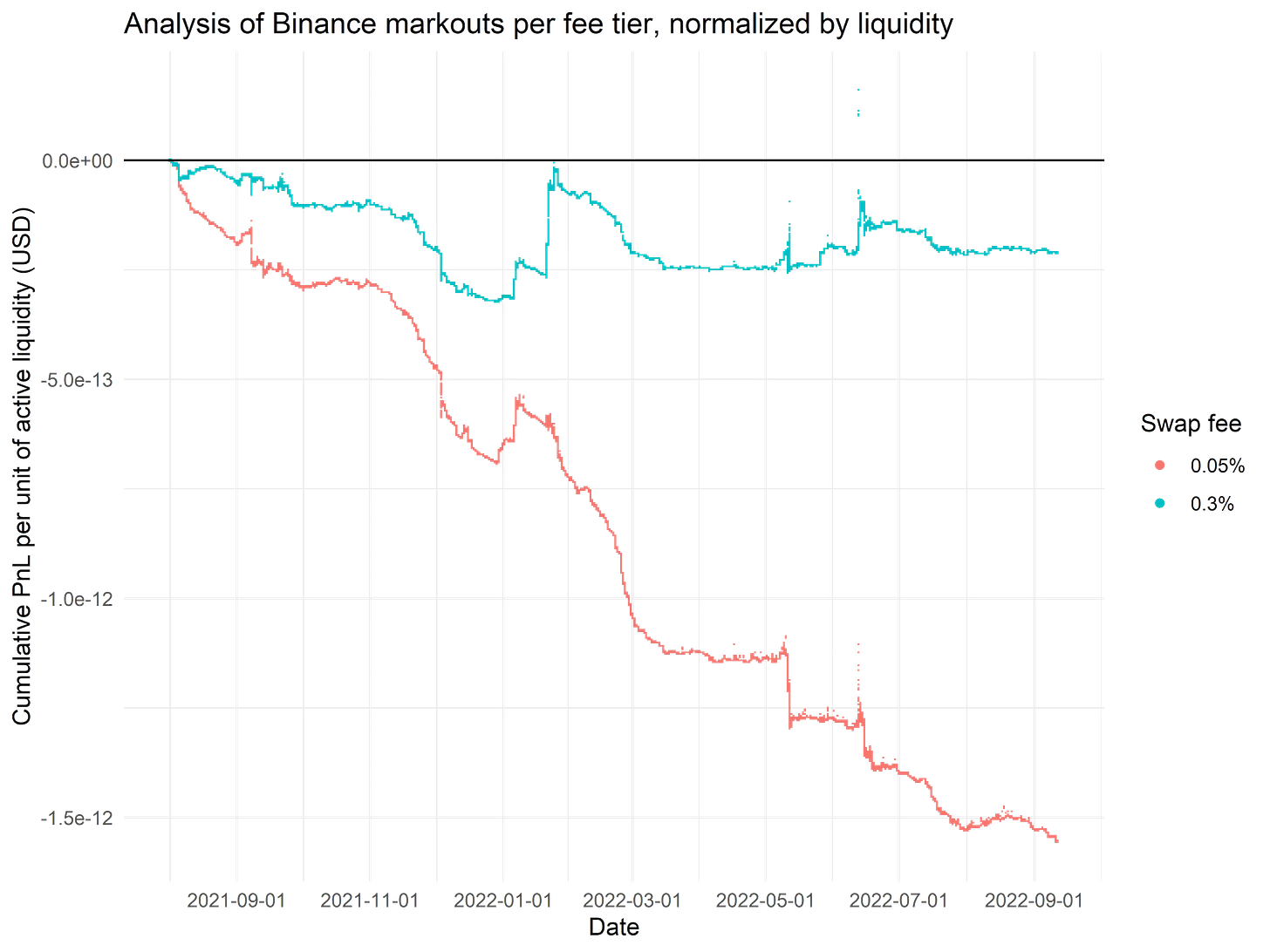

Uniswap needs no detailed description; however, the recent debate across crypto Twitter regarding LP profitability serves to highlight the fundamental weaknesses of AMMs. They’re too simplistic by design; a willingness to be the counterparty to any trade inevitably leads to systematic losses against informed arbitrageurs. Yet without cross-exchange arbitrage, efficient pricing would be absent, given the inability of AMMs to independently facilitate price discovery. Limited work had been done to quantify the extent of this necessary systematic loss until quite recently. See below what I find to be the most rigorous estimate of LP impermanent loss, from the team at CrocSwap. This particular graph likely boasts the most sensible combination of parameters: Binance prices as a reflection of fair value, PnL normalized by Uniswap V3 active liquidity, a 5 min markout period, and distinct plots for distinct fee tiers.

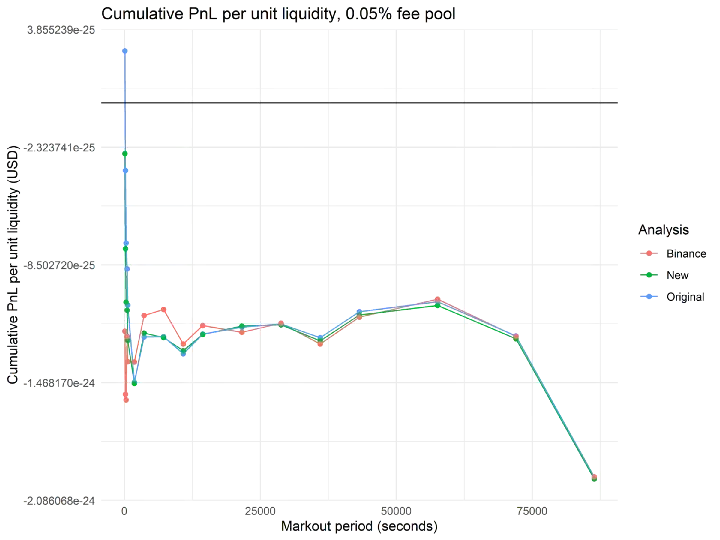

First, some explanation as to why I believe these particular parameters appear most sensible. Assume market making profit can be defined as follows: profit = (P_e - P_f)*d*q, where P_e is the execution price of the swap, P_f is the asset’s fair price, d is the swap direction, and q is the quantity of the base token (Alex Nezlobin). The fair price of an asset could be defined as the mid-price of the pool some time Δ later; however, CEX prices at time Δ later represent an even better proxy, given that AMM-based DEXs typically lag their centralized counterparts in reflecting an asset’s fair price. This is simply because a rational actor should only perform arbitrage if their profit exceeds the AMM’s fee. Therefore, using a Binance price feed makes a lot of sense here. Additionally, one could debate what the optimal markout period is; however, the plot below (again from the CrocSwap team) indicates that provisioning liquidity for the 0.05% fee tier is consistently unprofitable after 8 minutes. My hunch is that retail LPs on Uniswap are not immediately hedging deltas, rebalancing, etc.
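As a quick illustration of the profit definition above, here is a minimal sketch of a markout calculation. The sign convention is an assumption, since the text does not spell it out: `direction` is +1 when the trader buys the base token from the pool (so the LP sells) and -1 when the trader sells. The function name and the sample swaps are hypothetical and are not real Uniswap or Binance data.

```python
def markout_pnl(exec_price, fair_price, direction, quantity):
    """LP profit on one swap: (P_e - P_f) * d * q.

    Assumed convention: direction = +1 if the trader buys the base token
    from the pool, -1 if the trader sells it to the pool.
    """
    return (exec_price - fair_price) * direction * quantity


# Hypothetical swaps: (execution price, fair price after the markout period,
# direction, base-token quantity). The fair price would come from a CEX feed.
swaps = [
    (1500.0, 1510.0, +1, 2.0),  # LP sold at 1500, price later 1510: loss
    (1500.0, 1490.0, -1, 2.0),  # LP bought at 1500, price later 1490: loss
    (1500.0, 1499.0, +1, 1.0),  # LP sold at 1500, price later 1499: gain
]

total = sum(markout_pnl(*swap) for swap in swaps)
print(total)
```

The first two swaps are the adverse-selection case: the price keeps moving in the trader's direction after the fill, so the LP loses on both. Dividing the total by active liquidity, as the CrocSwap plot does, would be a further normalization step not shown here.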

One final point on Just-In-Time (JIT) liquidity. Some believe JIT liquidity is the only mechanism by which AMMs can sufficiently reduce slippage to compete with order books. This view ignores or underestimates the second-order effects of disincentivizing passive liquidity provisioning, specifically the damage to price discovery. Taking their argument to its logical conclusion, an AMM without any passive liquidity provisioning would resemble a deranged RFQ-esque thing where traders send their orders into the ether (pun intended) and hope for a response. Given passive LPs’ apparent unprofitability and the potential for JIT liquidity provisioning to scale, I hesitate to echo the industry-wide mantra that AMMs, at least in their current form, represent the future of decentralized finance.

Flashbots has, through MEV-geth, virtually eliminated the network congestion induced by priority gas auctions (PGAs) by taking blockspace auctions off-chain, and has reduced incentives for consensus producers to centralize and integrate with MEV searchers. Besides lower gas fees, however, their products have not actually improved execution. In fact, by essentially open-sourcing the gas auction process, Flashbots slashed the likelihood of future integrated miner-searcher monopolies in exchange for opening the proverbial MEV floodgates. A necessary sacrifice, I’d argue. Centralization of consensus producers proved a considerable threat to Ethereum’s vision for global financial sovereignty and required an immediate solution. Much like a bandage covering a severed limb, however, MEV-geth may stop the bleeding but it won’t protect against continued infection and eventual death.

In regard to MistX and SUAVE (Single Unified Auction for Value Expression), my general criticism is that both propose solutions involving a centralized layer of some form. While MistX indeed ensures pre-confirmation privacy, it does so at the expense of Ethereum’s transparency and decentralization guarantees. Similarly, SUAVE suggests replacing potential incentive-driven builder centralization with actual Flashbots-controlled sequencing centralization, at least initially. Here it’s important to note that the proportion of total cross-domain MEV derived from arbitrage between centralized exchanges and Ethereum AMMs likely far exceeds that of cross-chain arb (credit to Alex Nezlobin again for this insight). Therefore, unlike MEV-geth, which addressed the threat posed by consensus producer centralization, SUAVE does not appear to be a favorable trade-off for stakeholders within the Ethereum ecosystem. I find that lines like the following somewhat validate this concern.

“We can leverage that credible neutrality to get many parties to share their views, strategies, and opinions in a single place, giving SUAVE an information advantage on centralized builders.” - Flashbots SUAVE announcement

A few concluding thoughts: 1) Flashbots is a venture-backed startup so they must eventually transition (or already have) from a research entity building public goods to a company generating real cash flows, and 2) Flashbots products are redundant if MEV is somehow eliminated, so they have no incentive to address toxic trading at the root (time priority). Implicit within the long term value prop of Flashbots lurks the assumption that toxicity is part of the very fabric of trading, rather than an artifact of current market design. As previously noted, I find that this view lacks the optimism or creativity one would expect from those building the future of finance.

CowSwap represents a meaningful improvement in the optimal execution landscape but possesses a few core limitations that I believe will hinder its adoption by institutions. At a high level, the protocol claims to prevent adverse selection / front-running by matching trades within batches every 30 seconds (such a match is known as a Coincidence of Wants). Trades that are not matched within a batch before their user-assigned expiration, however, are routed elsewhere for immediate execution based on the current best price across other exchanges and aggregators. Therefore, CowSwap does not represent a fundamentally differentiated trading venue; rather, it functions as a meta-aggregator of sorts. Additionally, the protocol’s design admits a considerable tradeoff between expected execution price and time to execution. For instance, in the hopes of having one’s order matched within a batch and avoiding AMM fees and slippage, one should specify a later expiration. A later expiration, however, also risks adverse price movements before execution. On the other hand, an earlier expiration risks directing one’s order to Uniswap, where it’s front-run as usual.

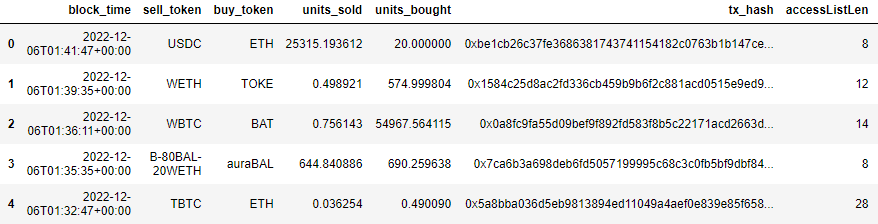

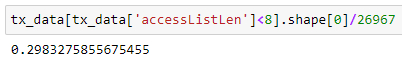

To better assess CowSwap’s utility, I wrote some code to estimate the proportion of orders that are actually matched within a batch. My methodology can be summarized as follows: 1) I pulled all transactions from the past month (11/5-12/5) that interacted with CowSwap’s GPv2Settlement contract, 2) I counted the total addresses in the access list specific to each transaction hash, and 3) I observed that transactions routed elsewhere almost always included access lists containing more than 6 contract addresses. If I had to guess, there’s probably a better methodology, or a number already out there on the internet somewhere. Regardless, the upper limit (29%) is almost certainly an overestimate, especially given that the period includes the entire FTX debacle and the associated volume increase, which leads to more frequent coincidences of wants. TLDR, only ~20% of CowSwap orders are executed in batch.
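The counting heuristic described above might look roughly like the sketch below. It is not the code used for the estimate: the `batch_share` function, the dictionary keys, and the placeholder addresses are all hypothetical, and the sample data is invented purely to show the mechanics. Only the "more than 6 addresses implies routed elsewhere" cutoff comes from the text.

```python
def batch_share(transactions, max_addresses=6):
    """Fraction of transactions whose access list has at most `max_addresses`
    distinct addresses, used here as a proxy for 'matched within a batch'."""
    if not transactions:
        raise ValueError("no transactions supplied")
    in_batch = 0
    for tx in transactions:
        # Addresses are compared case-insensitively to avoid double counting.
        distinct = {address.lower() for address in tx["access_list"]}
        if len(distinct) <= max_addresses:
            in_batch += 1
    return in_batch / len(transactions)


def fake_access_list(n):
    """Build n placeholder addresses (not real contracts)."""
    return [f"0xPLACEHOLDER{i:02d}" for i in range(n)]


# Invented sample: access-list sizes of 4, 5, 9, 12, and 8 addresses.
sample = [
    {"hash": f"0xHASH{i}", "access_list": fake_access_list(size)}
    for i, size in enumerate([4, 5, 9, 12, 8])
]

print(batch_share(sample))
```

In this invented sample, two of the five transactions fall at or under the cutoff, so the function returns 0.4. The figure says nothing about CowSwap itself; it only shows how the cutoff turns access-list sizes into a share.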

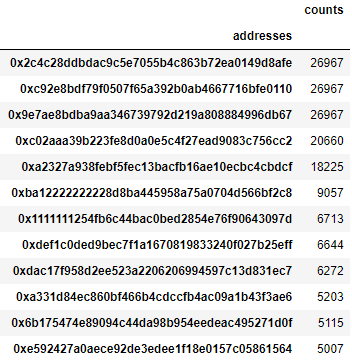

The following lists the addresses that appear most frequently within CowSwap transaction access lists. The first three addresses are other CowSwap contracts, while the next two are the WETH and USDC token contracts. Somewhat surprisingly, CowSwap routes 33.6% (9057/26967) of all orders at least in part through Balancer, as evidenced by the 6th most frequently occurring contract (0xba12…). This is due to a particularly tight, gas-efficient integration, according to CowSwap technical lead Felix Leupold. Furthermore, 24.9% (6713/26967) of orders are routed through 1inch (0x1111…), 24.6% (6644/26967) touch 0x (0xdef1…), and 18.6% (5007/26967) hit Uniswap V3 directly (0xe592…).

I’ll conclude with a few high level thoughts on frequent batch auctions. The general assumption is that FBAs should reduce the profits of fast traders since the quantities and execution prices of orders arriving within the batch interval are independent of when exactly they arrived. Overall, my impression of them is currently lukewarm, but still developing. I think the CitSec Market Lens report on the TWSE’s shift from FBAs to a CLOB makes a few compelling arguments for continuous trading, but also likely overstates the improvements to order book depth. Lower bid-ask spreads and higher trading volumes post-transition are intuitive findings given the tradeoffs between MM activity and batch length. On the other hand, the 2x increase in aggregate order book depth is likely a function of the acute uptick in order cancellation rates since HFTs leverage their speed to cancel stale orders before they’re picked off. Pete Kyle, market microstructure’s only hope for an eventual Nobel, has written on FBAs at some length. I’ll plan to unpack his theoretical argument against them in Toward a Fully Continuous Exchange and his subsequent reversal in Flow Trading, co-written with the authors of the original FBA paper, in the next issue of this series on market design. On to a super brief description of Rook Protocol.

Rook is an open settlement protocol that improves execution by returning MEV to uninformed traders. They incentivize a network of keepers, or MEV arbitrageurs, to coordinate and capture on-chain profits before efficiently re-directing a portion to the traders that generated them in the first place. Rook’s approach is actually quite impressive, and I’ve been somewhat surprised that they haven’t gained more traction given their fantastic execution on certain kinds of swaps. Although I am a proponent of Rook, I believe Krypton remains a superior solution to toxic trading, given that its approach is free from the potential scalability issues associated with growing and incentivizing a network of keepers to voluntarily forgo a proportion of their MEV profits.

Looking ahead

After considerable bluster, I’ll cede that truthfully there’s no guarantee Krypton will work exactly as we expect. Will market makers be disincentivized from providing sufficient liquidity? Will price discovery be fast enough? Is there a way to game the advanced limit order that we haven’t considered? These things remain to be seen. What I do know is that Krypton possesses the most distinctive exchange design I’ve seen, and that excites me. I hope this proved a relatively informative read. Looking ahead, the next issue will go in depth on frequent batch auctions, and the following will likely be an overview of the on-chain options landscape.